Publications

Functional architecture and mechanisms for 3D direction & distance in middle temporal visual area

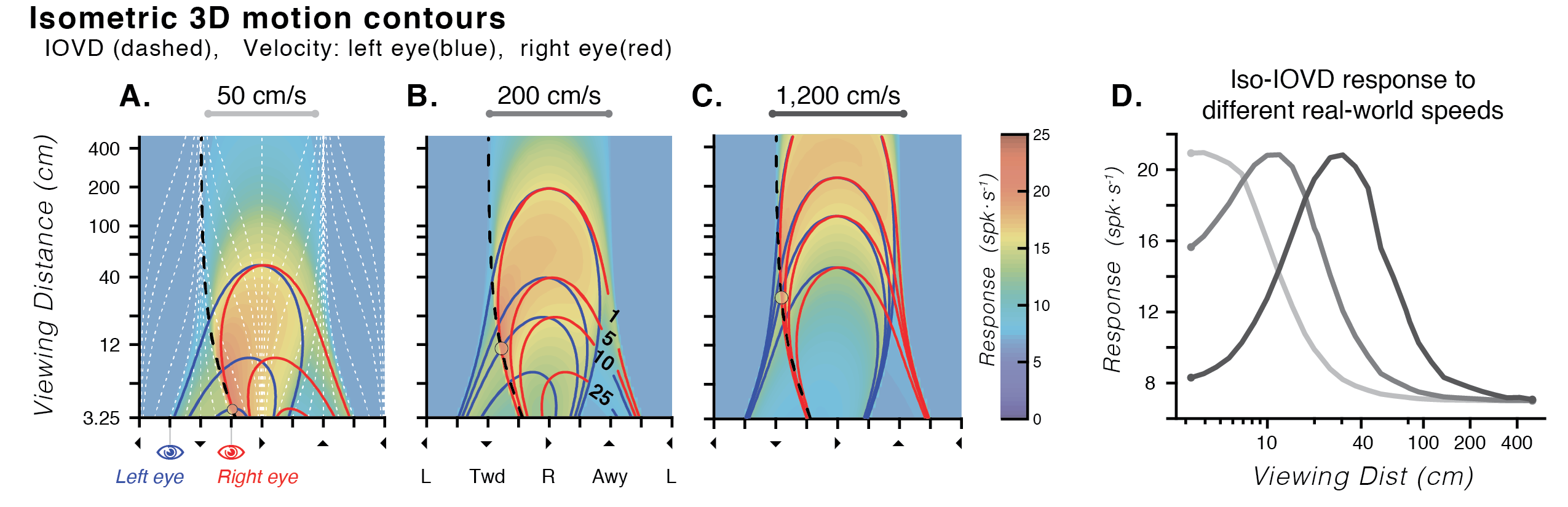

Experimental stimuli are often constrained to what can be presented on a flat monitor. While approximating retinal input in this way has laid the foundation for understanding mechanisms of visual processing, the majority of visual information encountered in the real world is 3D. Although cortical area MT has been one of the most thoroughly studied areas of the primate brain, recent evidence has shown that MT neurons are selective not only for 2D retinal motions but also for 3D motion directions (Czuba et al., 2014; Sanada & DeAngelis, 2014). We've also shown that a model of MT that incorporates the projective geometry of binocular vision predicts human perceptual errors in 3D motion estimation (Bonnen et al., 2020). Importantly, both the perceptual errors and the predicted neural responses are strongly influenced by viewing distance.

I therefore measured the responses of MT neurons in awake macaque to binocular 3D moving dot stimuli rendered with full geometric cues using a motorized projection display that allowed for precise & dynamic control of physical viewing distance in a range of 30–120 cm. Tuning for 3D direction was widely evident in neurons and similar at different viewing distances (regardless of disparity tuning). Many neurons with 3D direction tuning changed in overall response level across viewing distances.

Moreover, orderly transitions were apparent between interdigitated regions of 3D and 2D selectivity across linear array recordings tangential to the cortical surface. Interestingly, regions of 3D selectivity were not necessarily co-localized with classic disparity selectivity. Robust selectivity for 3D motion & space in MT extends beyond simple interaction of known selectivities, and may reflect a transition of information from retinal input space to environmental frames of reference. This finding reinforces the importance of stimuli that more fully encompass both the geometry of retinal projection & statistical regularities of the natural environment.

Binocular Mechanisms of 3D Motion Processing

Abstract

The visual system must recover important properties of the external environment if its host is to survive. Because the retinae are effectively two-dimensional but the world is three-dimensional (3D), the patterns of stimulation both within and across the eyes must be used to infer the distal stimulus—the environment—in all three dimensions. Moreover, animals and elements in the environment move, which means the input contains rich temporal information. Here, in addition to reviewing the literature, we discuss how and why prior work has focused on purported isolated systems (e.g., stereopsis) or cues (e.g., horizontal disparity) that do not necessarily map elegantly on to the computations and complex patterns of stimulation that arise when visual systems operate within the real world. We thus also introduce the binoptic flow field (BFF) as a description of the 3D motion information available in realistic environments, which can foster the use of ecologically valid yet well-controlled stimuli. Further, it can help clarify how future studies can more directly focus on the computations and stimulus properties the visual system might use to support perception and behavior in a dynamic 3D world.

Area MT Encodes Three-Dimensional Motion

Abstract

We use visual information to determine our dynamic relationship with other objects in a three-dimensional (3D) world. Despite decades of work on visual motion processing, it remains unclear how 3D directions (trajectories that include motion toward or away from the observer) are represented and processed in visual cortex. Area MT is heavily implicated in processing visual motion and depth, yet previous work has found little evidence for 3D direction sensitivity per se. Here we use a rich ensemble of binocular motion stimuli to reveal that most neurons in area MT of the anesthetized macaque encode 3D motion information. This tuning for 3D motion arises from multiple mechanisms, including different motion preferences in the two eyes and a nonlinear interaction of these signals when both eyes are stimulated. Using a novel method for functional binocular alignment, we were able to rule out contributions of static disparity tuning to the 3D motion tuning we observed. We propose that a primary function of MT is to encode 3D motion, critical for judging the movement of objects in dynamic real-world environments.

Spatiotemporal integration of isolated binocular three-dimensional motion cues

Abstract

Two primary binocular cues—based on velocities seen by the two eyes or on temporal changes in binocular disparity—support the perception of three-dimensional (3D) motion. Although these cues support 3D motion perception in different perceptual tasks or regimes, stimulus cross-cue contamination and/or substantial differences in spatiotemporal structure have complicated interpretations. We introduce novel psychophysical stimuli which cleanly isolate the cues, based on a design introduced in oculomotor work (Sheliga, Quaia, FitzGibbon, & Cumming, 2016). We then use these stimuli to characterize and compare the temporal and spatial integration properties of velocity- and disparity-based mechanisms. On average, temporal integration of velocity-based cues progressed more than twice as quickly as disparity-based cues; performance in each pure-cue condition saturated at approximately 200 ms and approximately 500 ms, respectively. This temporal distinction suggests that disparity-based 3D direction judgments may include a post-sensory stage involving additional integration time in some observers, whereas velocity-based judgments are rapid and seem to be more purely sensory in nature. Thus, these two binocular mechanisms appear to support 3D motion perception with distinct temporal properties, reflecting differential mixtures of sensory and decision contributions. Spatial integration profiles for the two mechanisms were similar, and on the scale of receptive fields in area MT. Consistent with prior work, there were substantial individual differences, which we interpret as both sensory and cognitive variations across subjects, further clarifying the case for distinct sets of both cue-specific sensory and cognitive mechanisms. The pure-cue stimuli presented here lay the groundwork for further investigations of velocity- and disparity-based contributions to 3D motion perception.

Binocular viewing geometry shapes the neural representation of the dynamic three-dimensional environment

Abstract

Sensory signals give rise to patterns of neural activity, which the brain uses to infer properties of the environment. For the visual system, considerable work has focused on the representation of frontoparallel stimulus features and binocular disparities. However, inferring the properties of the physical environment from retinal stimulation is a distinct and more challenging computational problem—this is what the brain must actually accomplish to support perception and action. Here we develop a computational model that incorporates projective geometry, mapping the three-dimensional (3D) environment onto the two retinae. We demonstrate that this mapping fundamentally shapes the tuning of cortical neurons and corresponding aspects of perception. For 3D motion, the model explains the strikingly non-canonical tuning present in existing electrophysiological data and distinctive patterns of perceptual errors evident in human behavior. Decoding the world from cortical activity is strongly affected by the geometry that links the environment to the sensory epithelium.
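The role of projective geometry described above can be illustrated with a minimal sketch. This is not the published model; it is a toy calculation under simplifying assumptions: a single point moving in the horizontal plane, two pinhole eyes separated by an assumed interocular distance of 6.5 cm, and no eye movements. The function names and all numerical values are hypothetical and chosen only for illustration.

```python
import math

def eye_angles(x, z, ipd=0.065):
    """Horizontal visual direction (radians) of a point at (x, z) for each eye.

    Assumed layout: eyes at (-ipd/2, 0) and (+ipd/2, 0), z is distance
    straight ahead in meters. ipd = 0.065 m is an illustrative value.
    """
    left = math.atan2(x + ipd / 2.0, z)
    right = math.atan2(x - ipd / 2.0, z)
    return left, right

def retinal_velocities(x, z, vx, vz, ipd=0.065, dt=1e-4):
    """Finite-difference angular velocity (rad/s) seen by each eye
    for a point at (x, z) moving with world velocity (vx, vz) in m/s."""
    l0, r0 = eye_angles(x, z, ipd)
    l1, r1 = eye_angles(x + vx * dt, z + vz * dt, ipd)
    return (l1 - l0) / dt, (r1 - r0) / dt

# Motion straight toward the observer along the midline:
# the two eyes see opposite horizontal directions.
toward_l, toward_r = retinal_velocities(x=0.0, z=0.5, vx=0.0, vz=-0.1)

# Frontoparallel (lateral) motion: both eyes see the same direction.
lateral_l, lateral_r = retinal_velocities(x=0.0, z=0.5, vx=0.1, vz=0.0)

# The same world velocity produces much smaller retinal velocities
# at a far viewing distance than at a near one.
near_l, _ = retinal_velocities(x=0.0, z=0.3, vx=0.0, vz=-0.1)
far_l, _ = retinal_velocities(x=0.0, z=1.2, vx=0.0, vz=-0.1)

print(toward_l, toward_r)
print(lateral_l, lateral_r)
print(near_l, far_l)
```

Even in this reduced setting, identical motion in the world maps onto very different retinal signals depending on direction and viewing distance, which is the kind of dependence a decoder of environmental motion would have to take into account.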

Decoding visual stimulus orientation from adapted neural populations

Adaptation is a nearly ubiquitous feature of neural circuits. Although much is known about the effects of adaptation on the tuning of visual cortical neurons, a broad gap remains in understanding how adaptation alters population responses and the information they contain. It is also unclear whether the perceptual effects of adaptation can be explained simply by altered encoding, or whether the inappropriate decoding of adapted signals contributes.

To address these issues, we measured the responses of populations of neurons in anesthetized macaque primary visual cortex (V1) following adaptation to high-contrast dynamic orientation stimuli. Experiments were designed to address two primary questions: 1) How does adaptation alter the quality of visual information encoded in a neural population? 2) How might a system alter the readout mechanism(s) to accommodate the effects of adaptation?

We adapted neurons with a rapidly presented sequence of drifting gratings (~6° diameter, 80 ms/orientation, 2.4 s/trial). We varied the composition of orientations in the adaptation sequence, from a single orientation to a uniform distribution (0–180°). Brief test stimuli were presented after each adapter. These consisted of small drifting gabors (2.5° fwhm, 400 ms) of different orientations, embedded in dynamic pixel noise. We adjusted the pixel noise on the test stimuli to provide both easily discriminable and more challenging cases.
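As a rough sketch of the adapter timing described above: at 80 ms per orientation, a 2.4 s adapter contains 30 orientation frames. The snippet below builds one such sequence for a single-orientation ensemble and for an approximately uniform one. The 15° orientation step, the random draw with replacement, and the function name are assumptions made for illustration; the text does not specify how the sequences were ordered or sampled.

```python
import random

FRAME_MS = 80      # duration of each orientation (from the text)
TRIAL_MS = 2400    # adapter duration per trial (from the text)

def adapter_sequence(orientations_deg, rng):
    """Draw one adapter sequence from a set of candidate orientations.

    Assumption: frames are drawn independently with replacement.
    """
    n_frames = TRIAL_MS // FRAME_MS  # 2400 / 80 = 30 frames
    return [rng.choice(orientations_deg) for _ in range(n_frames)]

rng = random.Random(1)

# Single-orientation ensemble: every frame has the same orientation.
single = adapter_sequence([90.0], rng)

# Approximately uniform ensemble over 0-180 deg (hypothetical 15 deg steps).
uniform = adapter_sequence([i * 15.0 for i in range(12)], rng)

print(len(single), len(uniform))
```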

Adaptation had a pronounced effect on V1 population responsivity. We quantified the information about stimulus orientation in the population by measuring the performance of a linear classifier (SVM). Across adaptation ensembles, we found a substantial loss of information about stimulus orientation, relative to an unadapted baseline. Decoder performance decreased more after adaptation to a single orientation than to a uniform distribution of orientations. We tested whether the detrimental effects of adaptation could be overcome by re-training the classifier on the adapted responses. Surprisingly, in most cases performance deficits were not mitigated by re-training.
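The decoding logic can be sketched with a toy simulation. This is not the analysis used in the study: it substitutes a nearest-centroid rule (a simple linear classifier for two classes) for the SVM, uses synthetic neurons with idealized orientation tuning, and models adaptation as a uniform response gain reduction with fixed additive noise, which is a strong simplifying assumption. All names and parameter values are hypothetical.

```python
import math
import random

def tuning(pref_deg, stim_deg, gain=1.0, kappa=2.0):
    """Idealized orientation tuning curve (180 deg period); mean response."""
    d = math.radians(2.0 * (stim_deg - pref_deg))
    return gain * math.exp(kappa * (math.cos(d) - 1.0))

def population_response(stim_deg, prefs, gain, noise_sd, rng):
    """One noisy trial of responses from the whole synthetic population."""
    return [tuning(p, stim_deg, gain) + rng.gauss(0.0, noise_sd) for p in prefs]

def fit_centroids(trials_by_class):
    """Mean response vector for each stimulus label."""
    return {label: [sum(col) / len(trials) for col in zip(*trials)]
            for label, trials in trials_by_class.items()}

def predict(resp, centroids):
    """Assign the label whose centroid is closest in Euclidean distance."""
    def sqdist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda lab: sqdist(resp, centroids[lab]))

def decode_accuracy(gain, noise_sd, n_train=50, n_test=50, seed=0):
    """Train and test on responses at the same gain, i.e. the decoder
    is 're-trained' on whatever state the population is in."""
    rng = random.Random(seed)
    prefs = [i * 180.0 / 16 for i in range(16)]  # 16 hypothetical neurons
    stims = (80.0, 100.0)                        # two test orientations
    train = {s: [population_response(s, prefs, gain, noise_sd, rng)
                 for _ in range(n_train)] for s in stims}
    centroids = fit_centroids(train)
    correct = 0
    for s in stims:
        for _ in range(n_test):
            resp = population_response(s, prefs, gain, noise_sd, rng)
            correct += predict(resp, centroids) == s
    return correct / (2 * n_test)

# Compare an "unadapted" population with a gain-reduced "adapted" one.
print("unadapted:", decode_accuracy(gain=1.0, noise_sd=0.5))
print("adapted:  ", decode_accuracy(gain=0.3, noise_sd=0.5))
```

Because training and testing use the same gain, the second call corresponds to a decoder that is aware of the adapted responses. In a toy model of this kind, any remaining deficit reflects reduced signal relative to noise rather than a mismatch between encoder and decoder.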

Our results suggest that adaptation reduces information about stimulus orientation in V1, and that this loss of information cannot be compensated for by using a decoder aware of the altered V1 responses. Ongoing experiments in awake primates performing a fine discrimination task will allow us to relate these findings to perceptual decision making, on a trial-by-trial basis.

Separate Perceptual and Neural Processing of Velocity- and Disparity-Based 3D Motion Signals

Abstract

Although the visual system uses both velocity- and disparity-based binocular information for computing 3D motion, it is unknown whether (and how) these two signals interact. We found that these two binocular signals are processed distinctly at the levels of both cortical activity in human MT and perception. In human MT, adaptation to both velocity-based and disparity-based 3D motions demonstrated direction-selective neuroimaging responses. However, when adaptation to one cue was probed using the other cue, there was no evidence of interaction between them (i.e., there was no "cross-cue" adaptation). Analogous psychophysical measurements yielded correspondingly weak cross-cue motion aftereffects (MAEs) in the face of very strong within-cue adaptation. In a direct test of perceptual independence, adapting to opposite 3D directions generated by different binocular cues resulted in simultaneous, superimposed, opposite-direction MAEs. These findings suggest that velocity- and disparity-based 3D motion signals may both flow through area MT but constitute distinct signals and pathways.

To CD or not to CD: Is there a 3D motion aftereffect based on changing disparities?

Abstract

Recently, T. B. Czuba, B. Rokers, K. Guillet, A. C. Huk, and L. K. Cormack (2011) and Y. Sakano, R. S. Allison, and I. P. Howard (2012) published very similar studies using the motion aftereffect to probe the way in which motion through depth is computed. Here, we compare and contrast the findings of these two studies and incorporate their results with a brief follow-up experiment. Taken together, the results leave no doubt that the human visual system incorporates a mechanism that is uniquely sensitive to the difference in velocity signals between the two eyes, but--perhaps surprisingly--evidence for a neural representation of changes in binocular disparity over time remains elusive.

Three-dimensional motion aftereffects reveal distinct direction-selective mechanisms for binocular processing of motion through depth

Abstract

Motion aftereffects are historically considered evidence for neuronal populations tuned to specific directions of motion. Despite a wealth of motion aftereffect studies investigating 2D (frontoparallel) motion mechanisms, there is a remarkable dearth of psychophysical evidence for neuronal populations selective for the direction of motion through depth (i.e., tuned to 3D motion). We compared the effects of prolonged viewing of unidirectional motion under dichoptic and monocular conditions and found large 3D motion aftereffects that could not be explained by simple inheritance of 2D monocular aftereffects. These results (1) demonstrate the existence of neurons tuned to 3D motion as distinct from monocular 2D mechanisms, (2) show that distinct 3D direction selectivity arises from both interocular velocity differences and changing disparities over time, and (3) provide a straightforward psychophysical tool for further probing 3D motion mechanisms.

Motion processing with two eyes in three dimensions

Abstract

The movement of an object toward or away from the head is perhaps the most critical piece of information an organism can extract from its environment. Such 3D motion produces horizontally opposite motions on the two retinae. Little is known about how or where the visual system combines these two retinal motion signals, relative to the wealth of knowledge about the neural hierarchies involved in 2D motion processing and binocular vision. Canonical conceptions of primate visual processing assert that neurons early in the visual system combine monocular inputs into a single cyclopean stream (lacking eye-of-origin information) and extract 1D ("component") motions; later stages then extract 2D pattern motion from the cyclopean output of the earlier stage. Here, however, we show that 3D motion perception is in fact affected by the comparison of opposite 2D pattern motions between the two eyes. Three-dimensional motion sensitivity depends systematically on pattern motion direction when dichoptically viewing gratings and plaids, and a novel "dichoptic pseudoplaid" stimulus provides strong support for use of interocular pattern motion differences by precluding potential contributions from conventional disparity-based mechanisms. These results imply the existence of eye-of-origin information in later stages of motion processing and therefore motivate the incorporation of such eye-specific pattern-motion signals in models of motion processing and binocular integration.

Speed and eccentricity tuning reveal a central role for the velocity-based cue to 3D visual motion

Abstract

Two binocular cues are thought to underlie the visual perception of three-dimensional (3D) motion: a disparity-based cue, which relies on changes in disparity over time, and a velocity-based cue, which relies on interocular velocity differences. The respective building blocks of these cues, instantaneous disparity and retinal motion, exhibit very distinct spatial and temporal signatures. Although these two cues are synchronous in naturally moving objects, disparity-based and velocity-based mechanisms can be dissociated experimentally. We therefore investigated how the relative contributions of these two cues change across a range of viewing conditions. We measured direction-discrimination sensitivity for motion through depth across a wide range of eccentricities and speeds for disparity-based stimuli, velocity-based stimuli, and "full cue" stimuli containing both changing disparities and interocular velocity differences. Surprisingly, the pattern of sensitivity for velocity-based stimuli was nearly identical to that for full cue stimuli across the entire extent of the measured spatiotemporal surface, and both were clearly distinct from those for the disparity-based stimuli. These results suggest that for direction discrimination outside the fovea, 3D motion perception primarily relies on the velocity-based cue with little, if any, contribution from the disparity-based cue.